OK. Time for a bit of abductive reasoning. I'm imagining a future shared virtual environment for the augmentationists.

I'm assuming that Second Life has become the best immersionist solution - great for role-play (fantasy identities, not spoilt by voice), content creation, etc. - so there's no need to bother trying to compete in these areas. The thing that I'm imagining is a 3D conferencing tool, with voice as the central communication device, and shared applications such as browsers and whiteboards to facilitate discussion, idea generation and collaborative working.

So, let's pretend I'm a student. My tutor has given me a web link to a Java Web Start application. The first time I run it, it checks my PC spec and installs all the necessary bits on my computer. My user ID (taken from BANNER or whatever standard is in place for student IDs) is already on the database, so I just need to log in using this and my usual password. As this is the first time I have logged in, I first need to create my avatar. The application checks to see if I have a functioning webcam, and if so, allows me to take a snapshot of myself. If I have no webcam, I have the option of uploading a mug-shot instead. I click a few points on the snapshot to calibrate my face, and click 'Generate'. A 3D face is created and uploaded to the server. Now I log into the virtual world, and my avatar is my 3D face (do we need bodies in virtual worlds? I went to a virtual reality conference about 15 years ago, and one of the speakers was dead against avatars having legs).

As I wander around this 3D world, I see other faces that I recognise. I wander up to them and say 'Hello!', with my voice. They say 'Hello!' back, with their familiar voices. I click on a 'Smiley' button and my 3D face smiles at them. I can see what they are looking at by the orientation of their 3D faces. We walk up to a giant web browser and I key in a URL. My friend admires my new artwork, which I have navigated to via the browser. He clicks on an 'impressed' button and his 3D face's expression morphs into an impressed-looking version of himself. He has an idea, and draws it on the whiteboard next to the browser. We agree to meet up for a drink later to discuss our new ideas.

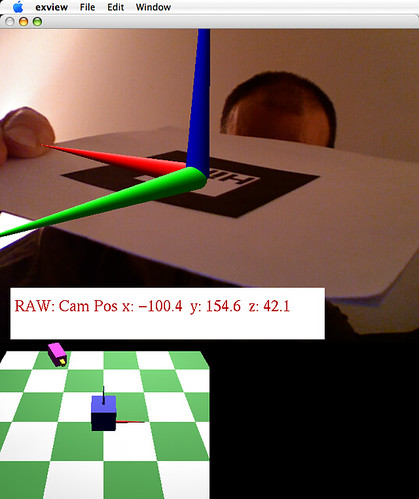

Looking a little further into the future, instead of a fixed 3D face, my 3D webcam places a live hologram of me into the virtual environment, massively enhancing communication via non-verbal cues. I interact with the environment by waving my arms about, maybe.